LightningBug ONE, the dataset

[LINK TO DATA (COMING SOON), MEANWHILE SAMPLE DATA]

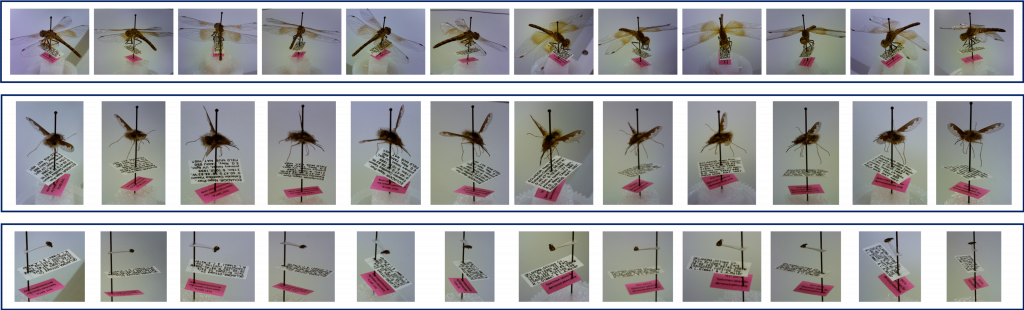

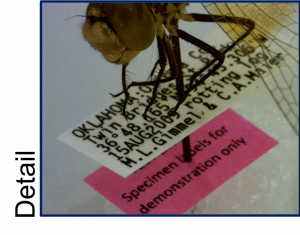

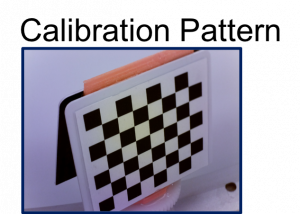

LightningBug ONE is a multi-view snapshot dataset for the development of automated digitization algorithms. It consists of 6000 specimen snapshots interleaved with 400 snapshots of the standard calibration chessboard (Fig. 3). As seen in Fig. 1, a snapshot comprises one image from each of the 12 cameras. Each of the 15 specimens was captured 400 times, once on each circuit of the FlyWheel (see below), each time at a different axial orientation angle. Throughput began at 1400 specimens per hour and degraded slowly as the aggregate object store grew and filesystem performance declined, giving an average of 1000 specimens per hour. The initial (peak) throughput was limited by the time needed to capture each snapshot and position the next specimen.
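The counts quoted above can be cross-checked with a few lines of arithmetic. This is only a sketch: the function and key names are illustrative, and the total image count assumes that every calibration snapshot also contains one image from each of the 12 cameras, which the text implies but does not state outright.

```python
def dataset_counts(num_specimens=15, circuits=400,
                   calibration_snapshots=400, cameras=12,
                   average_rate_per_hour=1000):
    """Derive dataset totals from the figures quoted in the text."""
    # Each specimen is captured once per circuit of the FlyWheel.
    specimen_snapshots = num_specimens * circuits
    total_snapshots = specimen_snapshots + calibration_snapshots
    # Assumption: calibration snapshots are also full 12-camera snapshots.
    total_images = total_snapshots * cameras
    # Run length implied by the average specimen throughput.
    run_hours = specimen_snapshots / average_rate_per_hour
    return {
        "specimen_snapshots": specimen_snapshots,
        "total_snapshots": total_snapshots,
        "total_images": total_images,
        "run_hours": run_hours,
    }


counts = dataset_counts()
print(counts)
```

With the default values this gives 6000 specimen snapshots and a 6-hour run, which matches the figures reported for the experiment.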

The object store contains the images from each camera, with metadata including time stamp, snapshot identification, camera number, sequence identification, and snapshot number within the sequence. The data is available by direct query to the Shock server through a RESTful interface. Typical multi-view snapshots are shown in Fig. 1. Each of the original images has been cropped around the specimen, and the cropped images are arranged in order of increasing azimuth from left to right. The alternating elevation of the camera poses can be discerned as well, beginning with high elevation in the upper left panel.
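As an illustration of how the per-image metadata listed above might be used once retrieved, the sketch below groups image records into snapshots and checks that each snapshot has one image per camera. The `ImageRecord` class, its field names, and both helper functions are hypothetical; the actual attribute names and value formats in the object store may differ.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ImageRecord:
    """One image's metadata; field names are assumed, not the store's own."""
    timestamp: str
    snapshot_id: str
    camera_number: int
    sequence_id: str
    snapshot_number: int  # position of the snapshot within its sequence


def group_by_snapshot(records):
    """Collect records by snapshot, each list sorted by camera number."""
    snapshots = defaultdict(list)
    for rec in records:
        snapshots[rec.snapshot_id].append(rec)
    return {
        sid: sorted(recs, key=lambda r: r.camera_number)
        for sid, recs in snapshots.items()
    }


def is_complete(snapshot, cameras=12):
    """True if the snapshot holds exactly one image per camera."""
    distinct = {rec.camera_number for rec in snapshot}
    return len(snapshot) == cameras and len(distinct) == cameras
```

Sorting by camera number is a stand-in here; reproducing the azimuth ordering of Fig. 1 would require the camera-to-pose mapping, which is not given in this description.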

Demonstrating mass-digitization at speed

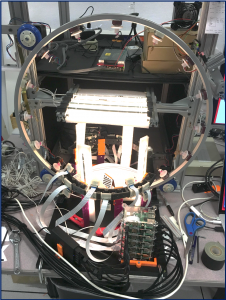

BugSnapper: We have designed, built, and tested a prototype snapshot 3D digitization station that rapidly captures multi-view imagery for automated digitization of pinned insect specimens and their labels. It consists of twelve very small, light 8-megapixel cameras, each controlled by a small dedicated computer (Fig. 4). The cameras are arrayed around the target volume, six on each side of the sample feed path. Their positions and orientations are fixed by a 3D-printed scaffolding designed for the purpose. The twelve camera controllers and a master computer are connected to a dedicated high-speed data network, over which all coordinating control signals and returning images and metadata are passed. The system is integrated with a high-performance object store that includes a database for the metadata and the archived images comprising each snapshot. The system is designed so that it can be readily extended to include additional or different sensors.

FlyWheel: The station is meant to be fed with specimens by a conveyor belt whose motion is coordinated with the exposure of the multi-view snapshots. To test the performance of the system, we added a recirculating specimen feeder designed expressly for this experiment.

LightningBug ONE: With the FlyWheel integrated into the system (Fig. 5) in place of a conventional conveyor belt, we are able to provide a continuous stream of targets for the digitization system, which facilitates long tests of its performance and robustness. We demonstrated its ability to capture data at a peak rate of 1400 specimens per hour and an average rate of 1000 specimens per hour over the course of a sustained 6-hour run. The dataset collected in this experiment provides material for the development of algorithms for offline reconstruction and automatic transcription of label contents. It includes 6000 specimen snapshots and 400 calibration snapshots.